This article originally appeared in Digital CXO.

Insight Partners’ investments in a few dozen artificial intelligence (AI) and machine learning (ML) portfolio companies have given us a broad perspective on the challenges these firms struggle with today and how those challenges may evolve. We were able both to share our experiences and to learn from the AI software community at Insight’s inaugural ScaleUp:AI conference a couple of weeks ago. The forum fostered many in-person and online conversations among more than 1,700 participants. We discussed timely topics in AI and ML, ranging from the current talent shortage to concerns about trust and privacy.

Here are a few of the challenges discussed:

Explainability

Many popular types of AI systems powered by neural networks function as black boxes: they make predictions but can’t explain why. These systems can automate certain prediction tasks, but they are hard for humans to collaborate with, because the humans don’t really understand what the system is doing. That opacity makes the systems hard to test, and it makes it difficult for them to earn trust and meet regulatory requirements.

Explainable AI is particularly important as ML makes the transition from academic experiments to production systems, where the lack of explainability can have real-world consequences. Consequently, AI ScaleUps are already working on improving the adoption of more transparent AI models.

Zest AI, which sells AI software to assist credit underwriting for banks and credit unions, uses powerful techniques that show which variables (such as employment, bankruptcies or debt-to-income ratio) affect the credit rating the algorithm assigns, and how much each variable contributes to that rating. Every incremental improvement in explaining the model’s decisions adds up, building trust between employees, end users and the AI system.
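The article does not describe Zest AI’s actual technique. As a hedged illustration of the general idea, the sketch below uses permutation importance, one common model-agnostic method: shuffle one feature’s values across applicants and measure how much the model’s scores move. The logistic model, its weights, the feature names and the synthetic applicants are all hypothetical.

```python
import math
import random

# Hypothetical toy credit model: a logistic score over named applicant features.
# The weights are illustrative assumptions, not taken from any real lender.
WEIGHTS = {
    "employment_years": 0.2,
    "bankruptcies": -1.0,
    "debt_to_income": -3.0,
    "unused_feature": 0.0,  # deliberately ignored by the model
}


def score(row):
    """Return a credit score in (0, 1) for one applicant (higher is better)."""
    z = sum(weight * row[name] for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def permutation_importance(rows, n_repeats=20, seed=0):
    """Average absolute change in score when one feature's column is shuffled.

    Shuffling breaks the link between that feature and each applicant, so a
    large change means the model leans heavily on the feature.
    """
    rng = random.Random(seed)
    baseline = [score(r) for r in rows]
    importance = {}
    for name in WEIGHTS:
        total = 0.0
        for _ in range(n_repeats):
            column = [r[name] for r in rows]
            rng.shuffle(column)
            for r, value, base in zip(rows, column, baseline):
                total += abs(score({**r, name: value}) - base)
        importance[name] = total / (n_repeats * len(rows))
    return importance


rng = random.Random(42)
applicants = [
    {
        "employment_years": rng.uniform(0, 10),
        "bankruptcies": rng.randint(0, 2),
        "debt_to_income": rng.uniform(0, 1),
        "unused_feature": rng.uniform(0, 1),
    }
    for _ in range(200)
]
for name, value in sorted(permutation_importance(applicants).items(), key=lambda kv: -kv[1]):
    print(f"{name:>16}: {value:.3f}")
```

The feature the model ignores scores exactly zero, which is the kind of sanity check that helps employees trust the ranking of the others.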

Causality

Humans are much better than today’s ML systems at explaining causality. Let’s say an ML system notices that airline seat prices are lower when occupancy rates are lower. It might conclude that lowering prices will cause lower occupancy. To any human looking at that data, however, it will be obvious that the causality runs the other way: lower seat occupancy is causing the lower prices.

Understanding causality is critical to making predictions, especially predictions about what will happen when we intervene. Humans can often see causal relationships that an ML system will miss, using a causal model of the world to imagine counterfactual scenarios. Granted, humans can also misjudge causality, but when we get it right, we recognize what software still misses or gets wrong.

Human expertise is therefore needed to teach causal knowledge to AI systems. Startups like causaLens have emerged to work on this problem, although today AI systems learn about causality mostly through humans directly designing the ML system. Ideally, we would capture the causal knowledge of human experts (think specialist physicians, not ML engineers) and build AI systems that can understand the kind of information included in causal diagrams (as explained in Judea Pearl’s The Book of Why) to help us work out the direction of causation.
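One hedged way to picture “capturing” an expert’s causal diagram is as a directed acyclic graph that software can check and reason over. In the sketch below, the medical variables and edges are hypothetical. The code verifies the diagram is acyclic (Kahn’s topological sort) and lists the direct causes of the treatment. In Pearl’s framework, adjusting for a treatment’s parents blocks every back-door path, provided all of those parents are actually measured.

```python
from collections import deque

# Hypothetical expert-elicited causal diagram (edges point from cause to effect).
EDGES = [
    ("age", "treatment"),
    ("age", "recovery"),
    ("severity", "treatment"),
    ("severity", "recovery"),
    ("treatment", "recovery"),
]


def parents(node, edges):
    """Direct causes of `node` in the diagram."""
    return {cause for cause, effect in edges if effect == node}


def topological_order(edges):
    """Kahn's algorithm; raises ValueError if the diagram contains a cycle."""
    nodes = {n for edge in edges for n in edge}
    indegree = {n: 0 for n in nodes}
    for _, effect in edges:
        indegree[effect] += 1
    queue = deque(sorted(n for n in nodes if indegree[n] == 0))
    order = []
    while queue:
        n = queue.popleft()
        order.append(n)
        for cause, effect in edges:
            if cause == n:
                indegree[effect] -= 1
                if indegree[effect] == 0:
                    queue.append(effect)
    if len(order) != len(nodes):
        raise ValueError("causal diagram must be acyclic")
    return order


print(topological_order(EDGES))
# Candidate adjustment set for estimating the effect of treatment on recovery.
print(sorted(parents("treatment", EDGES)))
```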

MLOps

ML is an important ingredient in many AI systems, and ML isn’t just about the models: it’s a whole set of pipelines that let developers build, deploy, monitor and analyze ML models.

There is an emerging next-generation MLOps stack that differs from the traditional data-analytics stack to meet ML-specific needs. Even the MLOps stacks for structured data (such as the tabular data used for loan origination) and for unstructured data (such as the image data that Tractable, Overjet or Iterative Scopes use for computer vision in insurance and healthcare) are diverging.

ML is notoriously resource-hungry, so building scalable ML infrastructure is turning out to be an important competitive advantage. Emerging MLOps stacks provide tools and services to monitor and manage operating parameters of the algorithm and its environment, such as latency, memory usage, and CPU and GPU usage.
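As a minimal sketch of the monitoring idea, using only the Python standard library, a decorator can record per-call latency and peak memory for an inference function. The `predict` function is a hypothetical stand-in for a model. Note that `tracemalloc` sees only Python-heap allocations (not GPU or native-library memory), and the decorator stops tracing when it finishes, so it should not be nested inside other tracemalloc users.

```python
import functools
import time
import tracemalloc


def monitored(metrics):
    """Decorator that appends latency (ms) and peak Python heap usage (bytes) per call."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(*args, **kwargs):
            tracemalloc.start()
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000
                _, peak = tracemalloc.get_traced_memory()  # (current, peak)
                tracemalloc.stop()
                metrics.append({"fn": fn.__name__, "latency_ms": elapsed_ms, "peak_bytes": peak})
        return inner
    return wrap


metrics = []


@monitored(metrics)
def predict(batch):
    # Hypothetical stand-in for model inference: a weighted sum per input row.
    weights = [0.5, -0.25, 1.0]
    return [sum(w * x for w, x in zip(weights, row)) for row in batch]


predict([[1.0, 2.0, 3.0]] * 1000)
print(metrics[-1])
```

In production these records would typically be exported to a metrics backend rather than kept in a list; the list just keeps the sketch self-contained.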

ML-powered products can be qualitatively better than the competition because of more efficient infrastructure. For example, video creation provider Hour One can afford to let customers experiment with their generated avatars for free and pay only when they have a version they like, instead of charging customers for each iteration.

Fully operationalizing AI without manual decision-making remains a challenge, especially at the edge. Building systems that pair ML with optimization (e.g., routing or pricing) to take action on their own, that produce content with generative models such as OpenAI’s GPT-3 or DALL-E, or that solve math word problems while “showing their work,” as Google Research’s Pathways Language Model (PaLM) does, requires a lot of human expertise today. These systems often run inference both at the edge and in central locations, pre-processing data at the edge before sending it to central locations for training. Most software platforms are not designed for this kind of architecture.
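To make “pairing ML with optimization” concrete, here is a deliberately tiny sketch. A hypothetical demand model (a linear function standing in for a trained predictor) feeds an optimization step that picks the revenue-maximizing price from a candidate grid. Real systems add uncertainty, inventory and business constraints; none of that is modeled here.

```python
# Hypothetical demand model standing in for a trained ML predictor:
# expected seats sold fall linearly as the price rises.
def predicted_demand(price):
    return max(0.0, 100.0 - 2.0 * price)


def best_price(candidate_prices):
    """Optimization step: choose the candidate price with the highest expected revenue."""
    return max(candidate_prices, key=lambda p: p * predicted_demand(p))


price = best_price(range(0, 51))
print(price, price * predicted_demand(price))  # 25 1250.0
```

The prediction and the decision are separate functions, so the learned model can be retrained without touching the decision logic, and vice versa.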

Privacy

Without sufficient protections, concerns about personal data and how it is used seem sure to limit some applications of AI. New techniques are emerging, such as secure multi-party computation, but they require expertise to implement today. Most of the public cloud vendors are investing heavily in the infrastructure to enable Confidential Computing, such as AWS Nitro Enclaves, Google Cloud Confidential Computing, and Azure Confidential Computing. Secure enclave hardware and associated easy-to-use confidential computing software platforms like Anjuna Security are seeing increasing adoption.
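Multi-party computation spans many protocols; one of its simplest building blocks is additive secret sharing. The hedged sketch below simulates, in a single process, three parties computing the sum of their private values: each splits its value into random shares modulo a prime, and only per-party partial sums are revealed. The salaries are hypothetical, and a real deployment would run the parties on separate machines over authenticated channels; this sketch assumes honest-but-curious parties that do not all collude.

```python
import secrets

# Additive secret sharing over a prime field: any n-1 shares of a value are
# uniformly random, so they reveal nothing about it on their own.
PRIME = 2**61 - 1  # a Mersenne prime; must exceed the largest possible sum


def share(value, n_parties):
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_sum(private_values):
    n = len(private_values)
    # Party i splits its value and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Each party j adds the shares it received; only these partial sums are published.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    return sum(partial_sums) % PRIME


salaries = [52_000, 61_000, 75_000]  # hypothetical private inputs of three parties
print(secure_sum(salaries))  # 188000
```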

Because AI algorithms can still identify a specific individual by name from data that seem innocuous at first (things like gender, zip code or even accelerometer data), AI systems that can mathematically prove that user privacy is maintained will be a must in many applications. Differential privacy provides such a guarantee: it strictly bounds how much any single person’s data can change an algorithm’s output, so the output reveals very little about whether any one individual was in the dataset.
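The classic way to achieve differential privacy for a count is the Laplace mechanism. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy for that query. The sketch below is illustrative only: the records and the zip-code query are hypothetical, and a production system should use a vetted DP library, since naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-differentially private count via the Laplace mechanism (sensitivity 1)."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


records = [{"zip": "02139"}] * 42 + [{"zip": "10001"}] * 58  # hypothetical dataset
rng = random.Random(7)
print(private_count(records, lambda r: r["zip"] == "02139", epsilon=1.0, rng=rng))
```

Smaller epsilon means more noise and stronger privacy. Each answered query spends privacy budget, so repeated queries on the same data weaken the overall guarantee.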

A myriad of other techniques exist to improve the privacy protection of AI systems, such as polymorphic encryption, federated learning and multiparty computation. Each of these techniques requires significant investment in time and capital.
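Federated learning keeps raw data on the devices that own it and shares only model updates. The sketch below implements Federated Averaging (FedAvg) for a one-parameter model y ≈ w·x. Each hypothetical client runs a few steps of local gradient descent, and the server averages the returned weights in proportion to each client’s data size. The synthetic data, learning rate and round counts are assumptions. In practice, updates can themselves leak information, which is why FedAvg is often combined with secure aggregation or differential privacy.

```python
import random


def local_update(w, data, lr=0.1, epochs=5):
    """One client's local training: gradient descent on mean squared error for y ≈ w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_averaging(clients, rounds=20, w=0.0):
    """Each round, clients train locally and the server averages their weights,
    weighted by example count. Only weights travel; raw data stays on clients."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        local_ws = [local_update(w, data) for data in clients]
        w = sum(lw * len(data) for lw, data in zip(local_ws, clients)) / total
    return w


# Hypothetical private datasets on three devices, all drawn from y = 3x + noise.
rng = random.Random(0)
clients = []
for n in (50, 100, 150):
    xs = [rng.uniform(0, 2) for _ in range(n)]
    clients.append([(x, 3 * x + rng.gauss(0, 0.1)) for x in xs])

print(round(federated_averaging(clients), 2))
```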

Talent

Staying up to date with the latest technology requires recruiting the best talent. One of the main challenges AI initiatives face is that the demand for AI skills is outpacing supply in the marketplace. Attracting and keeping top talent may mean a different approach to culture than in a traditional software organization.

AI technical leaders often seek an academia-like culture, where they can both publish papers and stay up to date with the latest research – but where they still have equity upside, mission-oriented use cases and access to the large data, compute capacity and financial resources that universities can’t always provide. At many ScaleUps, AI and data-science engineers work separately from traditional software engineering teams because they have such a different organizational dynamic.

Computer vision and video AI need a different set of tools and talent.

Computer vision is one of the fastest growing subspecialties in AI, with applications across almost all industries. In a few domains it has become incredibly accurate, sometimes more so than human eyes and brains.

In healthcare, the use of computer vision to identify anomalies in patient scans is exploding: health tech companies apply AI-assisted image recognition to CT scans, MRIs, X-rays and more. The Boston startup Iterative Scopes applies computer vision to colonoscopy videos in real time, vastly improving anomaly and polyp detection. Tractable can estimate how much it would cost to repair a damaged vehicle based on photos. And Overjet can tell whether a dental patient needs a crown or a filling by examining their X-rays.

The talent needed to build industrial-scale, world-class computer vision systems is still very difficult to find, and ScaleUps still need to build a lot of infrastructure themselves.

Data-centric (rather than model-centric) AI can help address the challenge of small datasets. The quality of the data, not just its quantity, is critical.

Some AI use cases have weak data-scale effects, such that obtaining more data doesn’t create product differentiation. Sometimes more or better data doesn’t improve AI performance much – in other words, there can be diminishing returns to marginal data. Data can also be easy to obtain or cheap to purchase — or accuracy improvements may not be as important for some use cases. It may still be useful to use AI in a product, but having more data may not provide a competitive advantage.

In other use cases, more data or higher-quality data can increase prediction accuracy, with marginal increases yielding significant gains in utility and strong barriers to entry. Going from 98% to 99.9% accuracy delivers a 20x reduction in error rate. For a self-driving car, that 1.9-percentage-point improvement is invaluable.
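Writing the error rate as one minus accuracy, the 20x figure and the 1.9-point gap work out as follows:

```latex
\begin{aligned}
e_{\text{before}} &= 1 - 0.98 = 0.02, \qquad e_{\text{after}} = 1 - 0.999 = 0.001,\\
\frac{e_{\text{before}}}{e_{\text{after}}} &= \frac{0.02}{0.001} = 20,\\
\Delta\,\text{accuracy} &= 0.999 - 0.98 = 0.019 \quad (1.9 \text{ percentage points}).
\end{aligned}
```

Put differently, errors per 1,000 predictions fall from 20 to 1, which is why a small-looking accuracy gain can matter so much in safety-critical settings.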

Andrew Ng’s 2021 webinar on MLOps highlights a disparity: The ML pioneer and Landing AI CEO found that of 100 abstracts of recently published ML papers, only one focused on improving data quality instead of model quality.

Techniques such as semi-supervised learning and active learning can greatly increase the size and quality of the training dataset, improving accuracy and reducing bias. A startup called LatticeFlow is able to create performant AI models by auto-diagnosing the model and improving it with synthetic data.
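Active learning often starts with uncertainty sampling: ask humans to label the examples the current model is least sure about, which tends to add more information per label than picking examples at random. A minimal sketch for a binary classifier follows; the pool of predicted probabilities is hypothetical.

```python
def uncertainty_sample(probabilities, k):
    """Return indices of the k unlabeled examples whose P(positive) is closest to 0.5."""
    ranked = sorted(range(len(probabilities)), key=lambda i: abs(probabilities[i] - 0.5))
    return ranked[:k]


# Hypothetical model outputs on an unlabeled pool of six images.
pool_probs = [0.97, 0.52, 0.10, 0.45, 0.88, 0.61]
print(uncertainty_sample(pool_probs, k=2))  # [1, 3]
```

After the selected examples are labeled, the model is retrained and the loop repeats, so the labeling budget concentrates on the hard cases near the decision boundary.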

More collective attention will need to be paid to dataset quality – e.g., obtaining better (not just more) examples of images of a manufacturing defect for model training. As AI use expands into new fields, ML architects face the problem of sparse datasets in unexplored domains. No amount of tinkering with an AI model will make up for a lack of high-quality training and feedback data.

AI ScaleUps need investors with very long-term time horizons.

Building an AI ScaleUp requires large up-front investments as companies struggle to define product-market fit, attract top talent and lay down a long-term foundation. Founders need to understand the relationship between their cash runway and the technical difficulty of the problem they’re trying to solve. Just as importantly, they need investors who understand the vision and commitment required for an AI ScaleUp to succeed.

Because AI is an iterative process, investors need to be willing to commit to the timeframe necessary for the engineering and data science behind the ScaleUp’s product to deliver value. As with any automation tech, the progression of an AI system is multi-step: At first the AI makes suggestions to humans, then it makes decisions with humans involved only in edge cases, and finally the automation reaches a level that doesn’t need a human touch at all.
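The middle stage of that progression is often implemented as a confidence threshold. In the hedged sketch below, `route` is a hypothetical helper: confident predictions are acted on automatically and the rest are escalated to a person. Moving the threshold spans the three stages, from suggestion-only to full automation.

```python
def route(prediction, confidence, threshold):
    """Automate confident cases and send the rest to human review.

    A threshold above 1.0 escalates everything (the AI only makes suggestions);
    a threshold of 0.0 automates everything (no human touch at all).
    """
    if confidence >= threshold:
        return ("auto", prediction)
    return ("human_review", prediction)


cases = [("approve", 0.97), ("deny", 0.62), ("approve", 0.91)]  # hypothetical model outputs
for prediction, confidence in cases:
    print(route(prediction, confidence, threshold=0.9))
```

Lowering the threshold over time, as measured accuracy on the automated cases justifies it, is one way to move gradually from the first stage to the last.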

Security needs to be built into AI systems from the beginning, since there are even more threat vectors associated with AI systems than there are with traditional software.

Like healthcare, the cybersecurity field is booming with opportunities for AI to innovate. The use of ML algorithms to detect intrusions, vulnerabilities and security threats is growing rapidly. However, securing the AI itself is just as important as using AI to secure a system: AI models are vulnerable to security attacks just like any other system.

Because AI systems are so specialized for the domains they serve, there are a host of possible threat vectors. Understanding and identifying them is an essential part of securing the system.

A self-driving car, for example, is vulnerable to attacks that interfere with its computer vision and decision making, removing its ability to make safe-driving choices on the road. Because it’s difficult to access and exploit the car’s software remotely, attackers may turn to unusual methods, such as tampering with road signs.
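Tampering with road signs is a physical-world version of an adversarial example: a small, targeted change to the input that flips the model’s decision. The sketch below applies the fast gradient sign method (FGSM) to a hypothetical linear “stop sign” detector with made-up weights and features. Real perception models are deep networks whose input gradients come from backpropagation, but for a linear score the gradient is simply the weight vector.

```python
# Hypothetical linear "stop sign" detector: score > 0 means "stop sign".
WEIGHTS = [0.8, -0.5, 1.2, 0.3]
BIAS = -0.5


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, epsilon):
    """Shift each feature by epsilon in the direction that lowers the score.

    For a linear score the gradient with respect to x is WEIGHTS, so stepping
    against sign(WEIGHTS) lowers the score by epsilon * sum(|w|).
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


clean = [0.6, 0.2, 0.4, 0.5]
adversarial = fgsm(clean, epsilon=0.3)
print(score(clean) > 0, score(adversarial) > 0)  # True False
```

No single feature moves by more than 0.3, yet the decision flips, which is why bounded-looking perturbations such as stickers on a sign can be dangerous.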

Another avenue of attack is data poisoning — injecting mislabeled data into the model’s training set, leading to misclassification when it’s used in production. Models can also be vulnerable to inference attacks, where an attacker probes the model with different input data and examines the output to reverse-engineer what data was used to train it. A startup called Robust Intelligence built an “AI Firewall” that can detect model vulnerabilities and shield against inference issues like unseen categoricals.
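A toy example shows how data poisoning works. The sketch below trains a one-feature nearest-centroid classifier, first on clean data and then on data with a few mislabeled points injected. The feature values, labels and probe are hypothetical. The injected points drag the “benign” centroid toward malicious territory, so an input that was correctly flagged is now waved through.

```python
def train_centroids(samples):
    """Nearest-centroid classifier: average feature value per label."""
    sums, counts = {}, {}
    for x, label in samples:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(centroids, x):
    return min(centroids, key=lambda label: abs(x - centroids[label]))


# Hypothetical one-feature data: benign traffic scores low, malicious scores high.
clean = [(1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
         (7.0, "malicious"), (8.0, "malicious"), (9.0, "malicious")]
# Poisoning: the attacker slips high-scoring points labeled "benign" into training.
poisoned = clean + [(9.0, "benign")] * 4

probe = 6.0
print(classify(train_centroids(clean), probe))     # malicious
print(classify(train_centroids(poisoned), probe))  # benign
```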

Ongoing security breaches and failure to address vulnerabilities will lead to a loss of public trust in the ScaleUp’s product and its ability to keep user information safe.

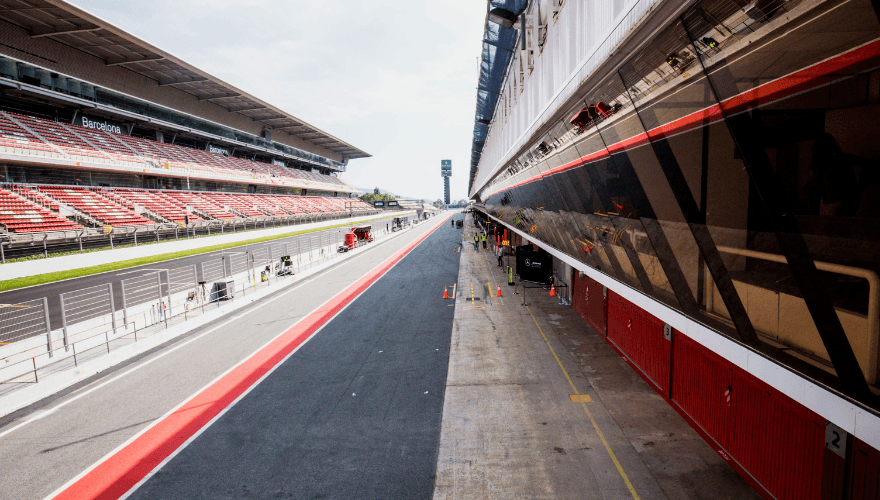

AI ScaleUps are reimagining the “shape” of the corporation in many industries.

The tech industry is all about innovation, and the shape and structure of a business is no exception. Many AI ScaleUps are going direct-to-consumer, following in the footsteps of Tesla, which sells cars without the auto industry’s traditional third-party dealers. Tesla created a relationship between driver and manufacturer that reflects the nature of the product being sold. Tesla customers aren’t just buying a car; they’re buying a hardware and software solution with AI included.

Hair care products brand Prose follows a similar model. Rather than relying on retailers to understand what drives customers’ buying behavior and what their hair-care concerns are, Prose analyzes each customer’s data from a detailed questionnaire on lifestyle, climate, diet, health and fitness. This data is fed into an AI model to identify a customized formulation for each customer. The company then uses customer feedback to iterate on this personalized product formulation.

This direct-to-consumer approach cuts out the middleman for a more important reason than cash flow: It reduces the risk of vendors abstracting away crucial data from which AI-equipped companies can better understand their customers — both in aggregate and one-to-one.

AI in 2022

AI will likely have a profound influence across many sectors. How we overcome the technology hurdles described above will make or break our responses to the crises and challenges that lie ahead. For example, as the effects of the pandemic began to wane, many companies didn’t unwind the large fixed costs they had taken on to accommodate remote workers and collaboration technology. With the rise in inflation, we may see a similar phenomenon. One way to address supply and labor shortages is to intentionally slow the economy, using tools such as substantially higher real interest rates. But another, less harmful approach could be to use AI to dramatically improve productivity.

Disclaimer: Insight Partners has invested in the following companies listed in this article: Zest AI, Tractable, Overjet, Iterative Scopes, Hour One, Anjuna Security, Landing AI and Prose.