AI is a rapidly developing sector in which Insight has invested more than $4B since 2014. We’re currently tracking 23,315 AI/ML startups and ScaleUps. Naturally, our team has seen a lot, and discussions around this topic generate plenty of opinions.

Here’s a look behind the curtain into some of what we’re reading, sharing, and discussing across the team at Insight lately. Think I missed something? Email me and tell me all about it. And don’t forget to save the date for ScaleUp:AI in October.

Google’s all revved up and ready to go

As teased in the title, Google I/O happened yesterday. And what’s more punk rock than a legacy tech giant up on stage, fighting for a comeback as its market share gets eaten up by both agile upstarts and its longtime search rival? Per Ars Technica: “Google I/O is clearly the ‘We’re extremely jealous of ChatGPT’ show, and the first hour was packed with Google announcing generative AI features for every input box the company has control over.” Saving you a click:

- Generative AI is coming to Google Search.

- Generative AI chatbot Bard, which is Its Own Thing and not part of Google Search, is available to everyone now.

- These generative AI features will be powered by PaLM 2, Google’s new large language model, which brings multilingualism (over 100 languages), reasoning (training data that includes scientific papers, plus improved math and logic capabilities), and coding.


Word of the week: Multimodal

The most unexpected news to drop this week was more open-source innovation from Meta re: multimodal embedding.

Wow, @MetaAI is on open-source steroids since Llama.

ImageBind: Meta’s latest multimodal embedding, covering not only the usual suspects (text, image, audio), but also depth, thermal (infrared), and IMU signals!

OpenAI Embedding is the foundation for AI-powered search and… pic.twitter.com/aKhZn78EZI

— Jim Fan (@DrJimFan) May 9, 2023

Read the research: “ImageBind: One Embedding Space To Bind Them All.”
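The practical payoff of “one embedding space” is cross-modal retrieval: because every modality is mapped into the same vector space, a text query can be compared directly against audio clips, images, or thermal readings. The minimal sketch below illustrates that idea with cosine similarity. The 3-dimensional vectors and file names are hand-picked assumptions standing in for real encoder outputs, and no actual ImageBind code or API is used.

```python
import math

# Toy, hand-picked vectors standing in for per-modality encoder outputs.
# Real ImageBind embeddings are high-dimensional and come from trained
# encoders; these values are purely illustrative assumptions.
EMBEDDINGS = {
    ("text", "a dog barking"): [0.9, 0.1, 0.0],
    ("audio", "bark.wav"): [0.8, 0.2, 0.1],
    ("audio", "rain.wav"): [0.0, 0.3, 0.9],
    ("image", "dog.jpg"): [0.85, 0.15, 0.05],
    ("thermal", "engine.png"): [0.1, 0.9, 0.2],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def retrieve(query_key, target_modality):
    """Rank every item of target_modality by similarity to the query item."""
    query = EMBEDDINGS[query_key]
    candidates = [
        (name, cosine(query, vec))
        for (modality, name), vec in EMBEDDINGS.items()
        if modality == target_modality
    ]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # A text query ranked against audio clips: only meaningful because
    # both modalities share one embedding space.
    for name, score in retrieve(("text", "a dog barking"), "audio"):
        print(f"{name}: {score:.3f}")
```

The same `retrieve` call works for any pair of modalities (text to image, audio to thermal, and so on), which is the point of binding them into a single space rather than training a separate model for each pairing.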

But that’s not all, folks: Hugging Face shows off what multimodal capabilities can do in its Transformers Agents update: “In short, it provides a natural language API on top of transformers: we define a set of curated tools and design an agent to interpret natural language and to use these tools. It is extensible by design; we curated some relevant tools, but we’ll show you how the system can be extended easily to use any tool developed by the community.”
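The quote describes a pattern: a registry of curated tools, an agent that maps a natural-language request onto one of them, and room for anyone to plug in more. Here is a toy sketch of that pattern. Everything in it (`Tool`, `ToyAgent`, the keyword-overlap routing, the demo tools) is hypothetical and is not the Transformers API; the real agent prompts an LLM to choose tools and write the code that calls them, which simple keyword matching only crudely stands in for.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    """A curated capability the agent may call (hypothetical structure)."""
    name: str
    description: str
    func: Callable[[str], str]


class ToyAgent:
    """Routes a natural-language request to one registered tool.

    Keyword overlap between the request and each tool's description
    stands in for the LLM that a real agent would use.
    """

    def __init__(self) -> None:
        self.tools: Dict[str, Tool] = {}

    def register_tool(self, tool: Tool) -> None:
        # Extensibility: new tools plug in without changing the agent itself.
        self.tools[tool.name] = tool

    def _pick_tool(self, request: str) -> Tool:
        words = set(request.lower().split())

        def score(tool: Tool) -> int:
            # Count description words that also appear in the request.
            return len(words & set(tool.description.lower().split()))

        best = max(self.tools.values(), key=score)
        if score(best) == 0:
            raise ValueError(f"No tool matches request: {request!r}")
        return best

    def run(self, request: str, payload: str) -> str:
        """Pick a tool for the request, then apply it to the payload."""
        return self._pick_tool(request).func(payload)


if __name__ == "__main__":
    agent = ToyAgent()
    agent.register_tool(Tool("word_counter", "count the words in text",
                             lambda text: str(len(text.split()))))
    agent.register_tool(Tool("shouter", "convert text to uppercase",
                             lambda text: text.upper()))
    print(agent.run("Please count the words", "hello open source world"))
    print(agent.run("make this uppercase", "hi"))
```

Keeping tools as plain data (name, description, function) is what makes the design extensible: the routing logic never needs to know which tools exist, only how to read their descriptions.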

Meta Open Sources Another AI Model, Moats and Open Source, Apple and Meta

From Stratechery, a killer roundup that touches on two of the hottest topics we’re seeing in AI discussions right now: moats (specifically, where they will form in open- versus closed-source innovation) and multimodality (specifically, the fourth and most recent AI-related release from Meta).

Bits and bots

- Humans struggle to explain what is happening inside an LLM, but maybe other LLMs can, via OpenAI.

- Good listen from No Priors: Personalizing AI Models with Kelvin Guu, Senior Staff Research Scientist, Google Brain

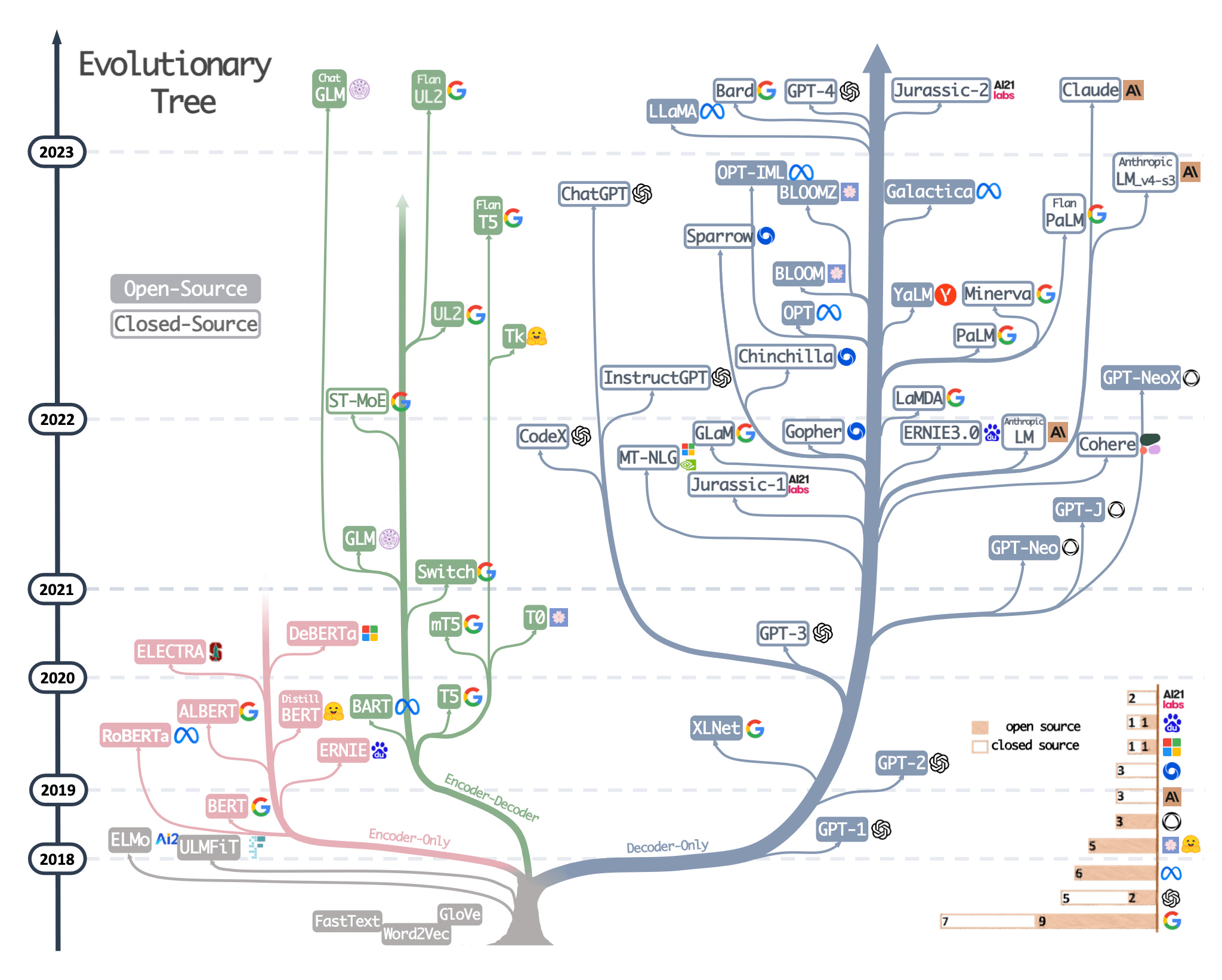

- Check out this cool visualization of the LLM space. Source: Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond

- Anthropic expands the context window of its chatbot, Claude, to 100K tokens (~75K words), roughly the length of the first Harry Potter book.

Introducing 100K Context Windows! We’ve expanded Claude’s context window to 100,000 tokens of text, corresponding to around 75K words. Submit hundreds of pages of materials for Claude to digest and analyze. Conversations with Claude can go on for hours or days. pic.twitter.com/4WLEp7ou7U

— Anthropic (@AnthropicAI) May 11, 2023

- Following the leaked “Google has no moat” memo I posted about last week, I present you with *yet another* AI MEGATHREAD in response:

you people love nothing more than a “leaked internal google memo”

and your breathless “no moats” retweets have compelled me to set you straight with another AI-obsessed megathread 😉🧵

tl;dr: we’ll see everything, everywhere, all at once, but OpenAI (& Google) have real moats!

— Nathan Labenz (@labenz) May 6, 2023

- Ashton Kutcher, best remembered by this author as the star of Dude, Where’s My Car? (and a mediocre Steve Jobs biopic that I forgot about), reportedly raised a nearly $250M AI fund for Sound Ventures in just five weeks. Does celebrity interest mean AI has jumped from early adoption to mainstream awareness?

From the Insight portfolio

- Nice overall generative AI explainer from Insight portfolio company AssemblyAI.

- “We’re excited to announce the launch of our AI Content Generator — available in Optimizely’s Content Marketing Platform!” (via LinkedIn)

Editor’s note: Articles are sourced from an ongoing, internal Insight AI/Data Teams chat discussion and curated, written, and editorialized by Insight’s VP of Content and Thought Leadership, Jen Jordan, a real human. (Though maybe not for long?)

Insight has invested in AssemblyAI and Optimizely.

Image credit: Google DeepMind via Unsplash. “Unsupervised Learning: Depiction of patterns and connections between objects representing one method in which AI systems learn from their own experiences. Artist: Vincent Schwenk.”